In the early days of touchscreen technology, Amazon CEO Jeff Bezos said, “It’s almost as if the device disappears and becomes this natural extension of your hand and finger.”

Well, with the rapid advancement of technology, the touchscreen may soon become obsolete. A group of researchers from Seoul National University, Stanford University and KAIST has developed a bio-printed smart skin that uses artificial intelligence (AI).

Scientists predict that this smart skin will let users type on invisible keyboards, identify objects by touch and communicate with people through gestures alone.

Electronic skins attached over key body joints can detect a user’s movements. Until now, however, scientists have struggled to make electronic skin stretchable and flexible enough to be used without significant limitations.

The American-Korean team’s breakthrough lies in a mesh of electronic circuits that is printed directly onto the human hand by spraying a conductive liquid. The thickness of the mesh is just one nanometer (nm).

Implications of the discovery for telemedicine and gaming

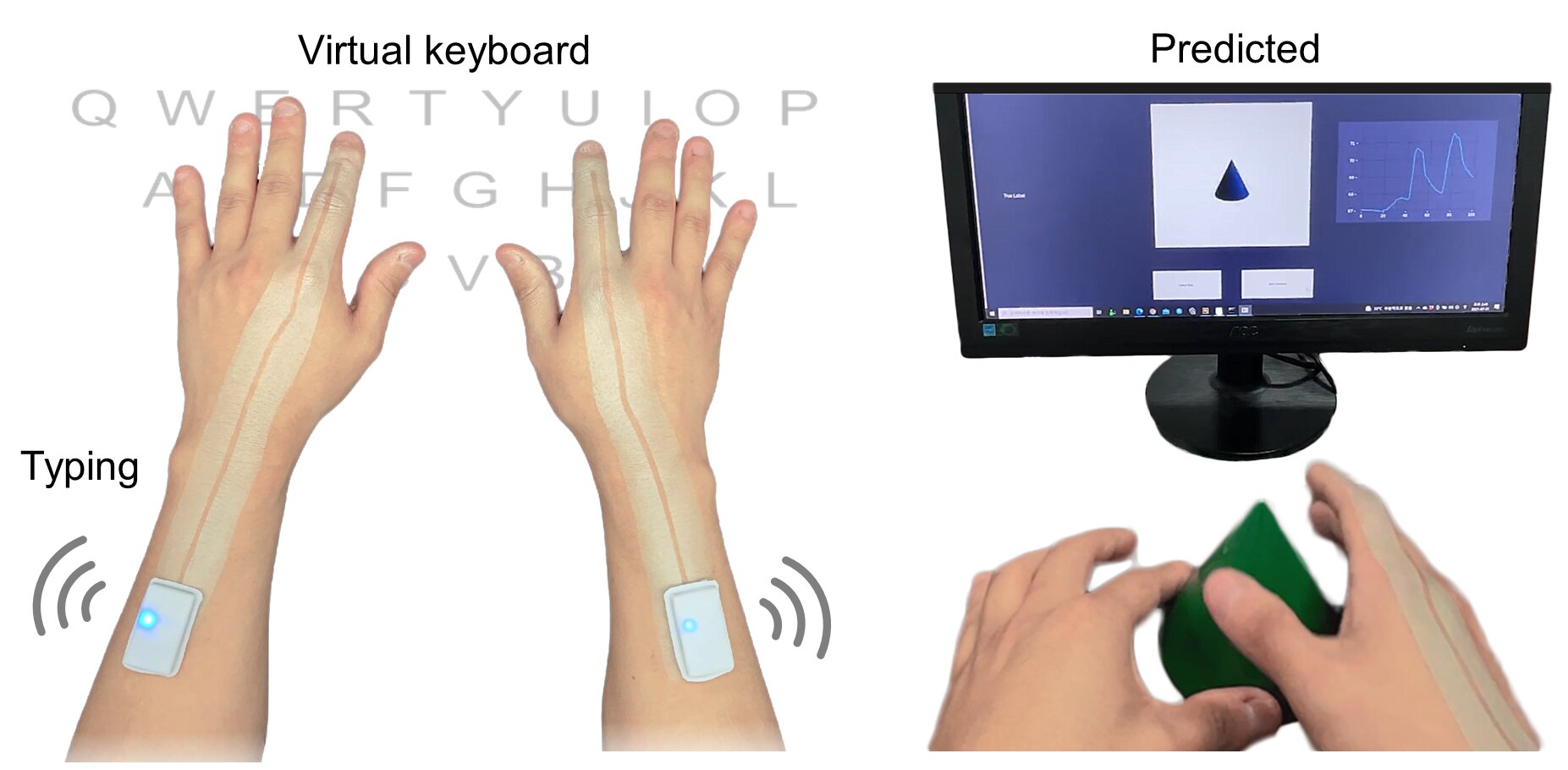

When stretched, the conductive mesh produces an electrical signal, which is transmitted wirelessly via Bluetooth. How far the mesh stretches depends on the extent of the user’s movement, so the signal varies with that movement.

The integrated AI quickly learns the patterns of the user’s hand or body movements. As a result, once the user has repeated the same motion a few times, the system can carry out various tasks from gestures made in mid-air.
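The article does not describe the team’s learning algorithm. As a rough illustration of how a system can pick up a gesture from only a few repetitions, here is a minimal Python sketch of nearest-centroid (prototype) classification. It assumes each gesture arrives as a short, fixed-length vector of sensor readings; the function names and numbers are hypothetical, and this is not the researchers’ method.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical feature vector: a few strain-sensor readings per gesture
# (arbitrary units). Real signals from the nanomesh are not reproduced here.
Sample = Sequence[float]


def centroid(samples: List[Sample]) -> List[float]:
    """Average several repetitions of the same gesture into one prototype."""
    n = len(samples)
    return [sum(values) / n for values in zip(*samples)]


def learn_prototypes(examples: Dict[str, List[Sample]]) -> Dict[str, List[float]]:
    """Build one prototype per gesture label from a few labelled repetitions."""
    return {label: centroid(reps) for label, reps in examples.items()}


def classify(prototypes: Dict[str, List[float]], sample: Sample) -> str:
    """Return the label whose prototype is closest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(prototypes[label], sample))


if __name__ == "__main__":
    # Three repetitions per gesture (made-up numbers for illustration only).
    examples = {
        "tap": [[0.9, 0.1, 0.0], [1.0, 0.2, 0.1], [0.8, 0.1, 0.0]],
        "swipe": [[0.1, 0.8, 0.9], [0.2, 0.9, 1.0], [0.1, 0.7, 0.8]],
    }
    prototypes = learn_prototypes(examples)
    print(classify(prototypes, [0.85, 0.15, 0.05]))  # prints "tap"
```

Averaging a handful of repetitions into one prototype per gesture is one of the simplest ways to learn from very few examples; a real system would use richer signal features and a more capable model.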

The research team also combined the skin with virtual reality (VR) technology and tested two applications:

- Typing letters on a computer screen using hand gestures without a keyboard

- Drawing the exact shape of an object on the screen when it is touched

Both tests were successful. This suggests the technology could enable people with speech disorders to communicate in sign language in virtual spaces. In addition, it could be widely used in telemedicine, robotics and gaming in the future.

The full study and its results are published in the journal Nature Electronics.