European law enforcement agencies have expressed concern about the risks posed by websites that use Artificial Intelligence (AI), including ChatGPT, amid a sudden increase in demand for the technology.

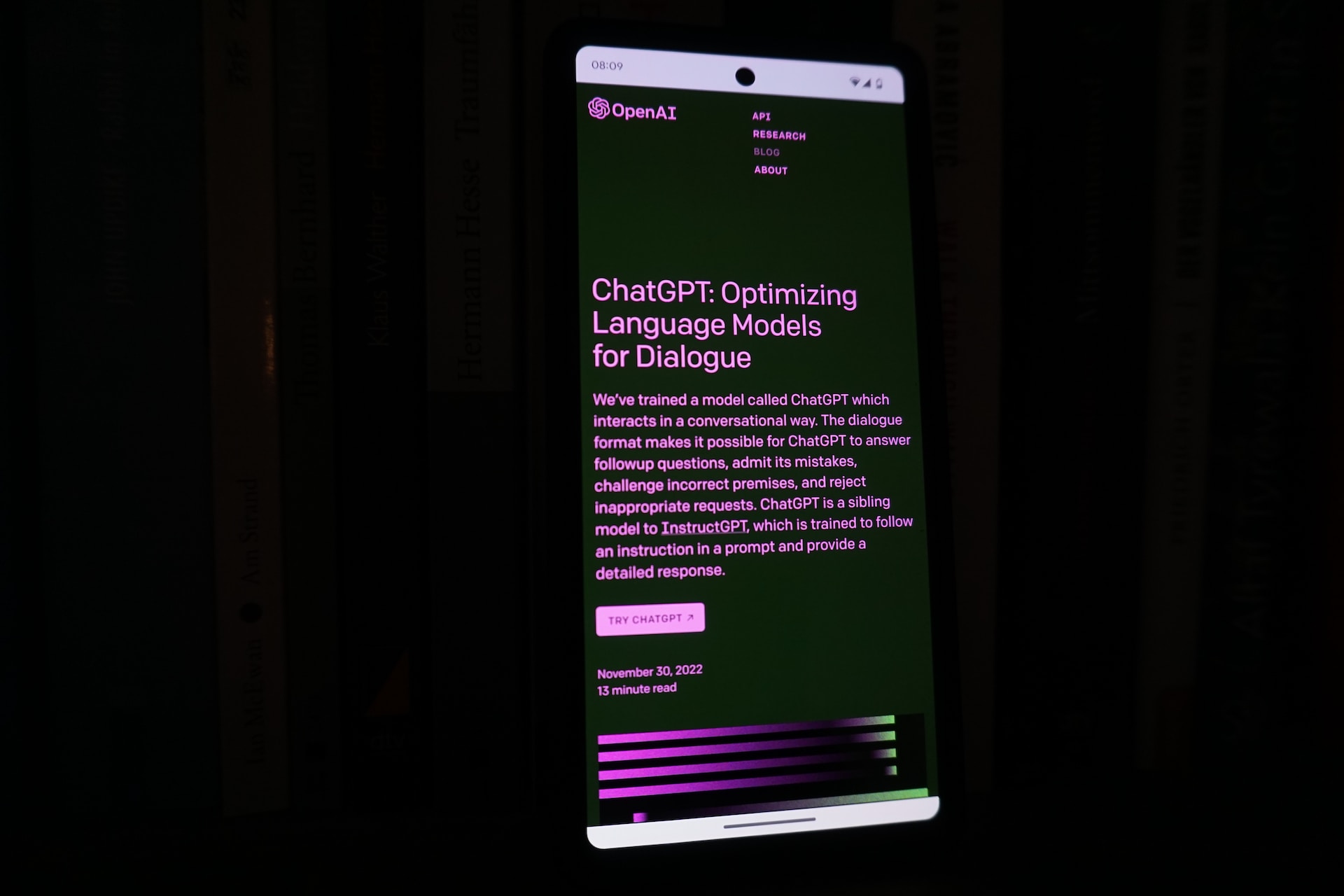

Despite the potential risks, ChatGPT has gained over 1.6 billion users since December. Many people have used the tool for tasks such as writing essays and poetry, analyzing data, and coding. Meanwhile, European companies are in a frantic battle to win the AI race.

In March, the European Union Agency for Law Enforcement Cooperation (Europol) issued a public alert regarding AI platforms such as ChatGPT, warning that they could enable unauthorized access to data and files, and facilitate criminal acts.

According to Europol, ChatGPT could potentially provide criminals with vital information for their next move, enabling them to commit a range of crimes including burglary, terrorism, internet crime, and child sexual abuse.

Last month, Italy's privacy regulator, the Garante, fined OpenAI, the creator of ChatGPT, millions of dollars for privacy infringements on its platform, resulting in a temporary ban.

The Need for AI Regulations

Critics in Spain, France, and Germany have also raised concerns about data privacy infringements. Additionally, the European Data Protection Board has established a committee to coordinate restrictions across all 27 EU countries.

Dragos Tudorache, a European lawmaker and co-sponsor of the AI law, described this as a wake-up call for Europe. He said that Parliament will finalize its AI legislation, as lawmakers must understand what is happening and how to regulate AI technologies.

Although AI systems such as Amazon’s Alexa and online chess games have been part of our daily lives for many years, generative AI tools such as ChatGPT have redefined the technology.

Experts have warned that while AI technology such as ChatGPT holds vast knowledge that few people possess, it also poses a risk by opening the door to all sorts of crimes, such as identity theft and copyright infringement in schools.